How Industries Are Putting AI to Work

Two parallel trends underpin recent developments in generative artificial intelligence (AI): AI models are getting cheaper, and they’re also becoming more capable. As a result, the adoption of AI across industries is surging, says Alexander Duval, head of Europe Tech Hardware & Semiconductors in Goldman Sachs Research.

We interviewed Duval after Goldman Sachs’s European AI and Semis symposium, which convened 500 participants over two days in London. In his conversations, Duval says, he encountered a broader array of use cases for AI than in the previous year, with sectors as varied as healthcare, retail, manufacturing, and education now adopting AI to cut costs and improve productivity. A number of bottlenecks remain, but the symposium’s participants also highlighted several technological advances that can help overcome them, with a number of European companies playing key roles.

What was the mood at the symposium? How are people viewing the promise of generative AI today?

The mood at the symposium was positive, with participants having observed a substantial surge in adoption of generative AI. For example, one hyperscaler has reported a fivefold increase in token usage over the last 12 months.

We saw discussions of a broader array of use cases for generative AI than the year before and a sense that it is not only seeing strong growth in Big Tech companies, but is also now able to drive more tangible benefits for business in the real economy.

What are some of these use cases?

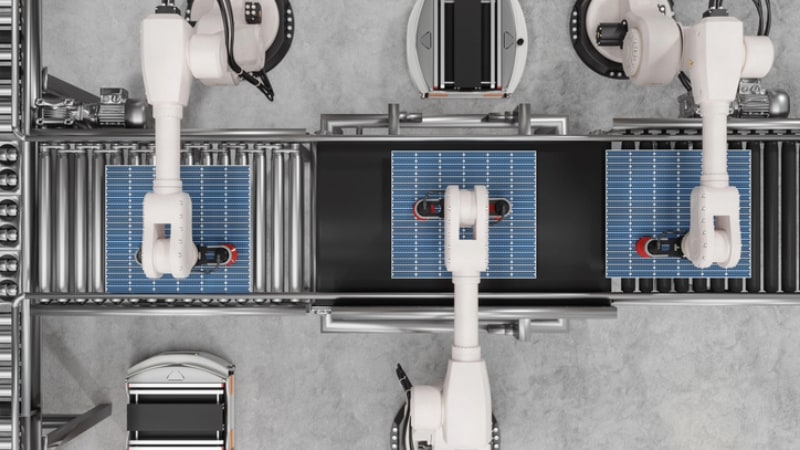

In healthcare, surgeons are solving complex problems much more efficiently with AI trained on 25 years of video data. In the industrial sector, one company at the event said it leverages thousands of autonomous robot agents equipped with AI, operating 24/7 in its facilities, leading to clear cost benefits.

Meanwhile, in retail, generative AI is powering robots that perform stock-taking and predictive analysis to prevent stock-outs and promote product uptake, and these are driving a three-to-four-times return on investment within a three- to four-month period. One company stated that it has been able to automate one week per month of human workload with AI.

How is the cost model of deploying and running generative AI systems changing?

The cost model is undergoing significant transformation, which includes the emergence of cheaper Large Language Models (LLMs). Some commonly used models, for example, have become multiple times cheaper than earlier versions for both training and inference.

That said, absolute capex spend remains high for now, as the industry is leveraging the extra compute power to train and use more sophisticated models across video, speech, images, and other modalities.

And what about the capabilities of generative AI?

Generative AI capabilities are rapidly improving. This progress is underscored by recent milestones, such as LLMs earning a gold medal in a Math Olympiad.

Multimodal systems—AI entities that can process, understand, and generate information from multiple types of data such as video or speech—have been successfully implemented in various fields. Most notably, we see them in robotics, where adoption has gained momentum in margin-sensitive industries like manufacturing, logistics, retail, and healthcare.

Robots are increasingly used in environments inaccessible to humans, such as underground or hazardous settings. Overall trends suggest that robotics will augment human labor rather than displace it, supporting collaborative use.

The term “agentic AI” came up a lot during the symposium. What exactly is agentic AI, and how is it gaining prominence?

Agentic AI operates autonomously and, crucially, takes actions to achieve specific goals, rather than simply providing answers. AI agents tend to be strong at extrapolating observed data and applying it to novel use cases. Agentic AI has progressed from executing isolated actions to managing complete workflows, including tasks such as code testing, mirroring the role of a digital employee.

Despite these advancements, some speakers saw bottlenecks to broader adoption of agentic AI. Firstly, infrastructure gaps mean agents may currently lack access to enterprise-grade data beyond the open internet. Secondly, many offerings are still in their early stages, with some major players having only recently released agentic products. Thirdly, it will take time for users to gain trust in LLM outputs.

Part of the promise of generative AI is to improve the productivity of workforces. Is that already happening? And if so, could you give us some examples of sectors that are experiencing such gains?

Yes, these productivity gains are evident in several industries, according to participants. One speaker highlighted that AI is accelerating software development by up to six times.

AI is also enhancing enterprise support functions, with one tech firm reporting that AI now handles up to 50% of workloads in this area. Leading LLMs have demonstrated the ability to outperform top programmers in certain tasks, and they account for 25% of new code lines at one hyperscaler.

The entertainment industry has also seen significant impact: Speakers highlighted that one streaming company has integrated generative AI into its visual effects pipeline, accelerating production by 10x.

That said, different industries will progress at different speeds, especially given the need for guardrails.

What are the chief bottlenecks that the industry faces in accelerating the development and use of generative AI?

One chief bottleneck is power. A speaker noted that certain data centers could require as much power as New York City, while another suggested that a 500-megawatt (MW) data center demands about as much power as 500,000 homes. This necessitates not only new sources of power generation but also more efficient grids and increasingly thermally efficient technology within data centers.
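To put the speaker's comparison in per-home terms, the arithmetic can be sketched as follows. This is a back-of-the-envelope illustration only: the function name is hypothetical, and the calculation assumes the 500 MW figure is a continuous draw spread evenly across the 500,000 homes, which the speaker did not specify.

```python
HOURS_PER_YEAR = 24 * 365  # 8,760 hours, ignoring leap years


def average_kw_per_home(data_center_mw: float, homes: int) -> float:
    """Average power per home in kilowatts if the data center's draw
    were split evenly across the given number of homes."""
    return data_center_mw * 1000 / homes  # 1 MW = 1,000 kW


# Figures quoted by the speaker: a 500 MW data center vs. 500,000 homes.
per_home_kw = average_kw_per_home(500, 500_000)

# Assumption: the draw is continuous all year, so energy = power x hours.
per_home_kwh_per_year = per_home_kw * HOURS_PER_YEAR

print(f"{per_home_kw} kW per home")
print(f"{per_home_kwh_per_year} kWh per home per year")
```

Under these assumptions, the comparison implies an average draw of 1 kW per home.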

Beyond power, guardrails are essential for AI-generated content. This requires human reviewers alongside technical safeguards such as prompt engineering, output filtering, and safety classifiers to mitigate risks. Additionally, citations, source links, and user feedback mechanisms are important for ensuring the reliability of AI output and providing a channel for continuous improvement.

And it’s crucial to establish transparency and traceability to explain the choices AI has made, as well as to develop mechanisms to avoid bias.
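As a rough illustration of how the technical safeguards mentioned above might be layered, the sketch below chains an output filter and a safety classifier, then flags results for a human reviewer. Everything in it is an assumption made for illustration: the blocked-term list, the toy classifier (a stand-in for a real trained model), the threshold, and all function names are hypothetical and were not described by the speakers.

```python
from dataclasses import dataclass, field
from typing import Callable, List

# Hypothetical blocklist; a real deployment would use its own policy.
BLOCKED_TERMS = {"password", "ssn"}


@dataclass
class Review:
    text: str
    allowed: bool
    reasons: List[str] = field(default_factory=list)
    needs_human_review: bool = False


def output_filter(text: str) -> List[str]:
    """Return a reason for each blocked term found in the text."""
    lowered = text.lower()
    return [f"blocked term: {t}" for t in sorted(BLOCKED_TERMS) if t in lowered]


def toy_safety_classifier(text: str) -> float:
    """Stand-in for a trained classifier: risk score in [0, 1],
    here simply the share of all-caps words."""
    words = text.split()
    if not words:
        return 0.0
    shouting = sum(1 for w in words if w.isupper() and len(w) > 1)
    return shouting / len(words)


def check_output(
    text: str,
    classifier: Callable[[str], float] = toy_safety_classifier,
    review_threshold: float = 0.3,
) -> Review:
    """Apply the filter, then the classifier, then decide on human review."""
    reasons = output_filter(text)
    score = classifier(text)
    return Review(
        text=text,
        allowed=not reasons,
        reasons=reasons,
        # Anything filtered or scored as risky is routed to a person.
        needs_human_review=bool(reasons) or score >= review_threshold,
    )


if __name__ == "__main__":
    for sample in ["The forecast looks stable.", "Here is the admin password"]:
        print(check_output(sample))
```

The design choice here is that the automated checks never have the final word on borderline cases: they either pass content through or hand it to a human reviewer, which mirrors the combination of human review and technical safeguards described above.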

What technological advances in semiconductors and other hardware can help address some of the AI industry’s challenges?

Several European companies play important roles in enabling the technological advances in semiconductors and other hardware that are crucial for addressing the challenges faced by the AI industry.

Among these advancements are cutting-edge lithography tools that are vital for lowering cycle time and improving yield in the manufacturing of chips. These advancements support the increasingly aggressive roadmaps of customers wanting to underpin AI training with more power and less latency.

Another significant advance is a process called hybrid bonding, which helps create chips that consume less power and generate far less heat.

Furthermore, semiconductors made of materials such as silicon, silicon carbide, and gallium nitride are playing an increasingly meaningful role in improving power efficiency.

What does the next phase of AI development and use look like?

Speakers mentioned that the next phase could involve improvements in areas such as reasoning models, integration of quantum computing to generate a richer array of data for training models, and use of further hardware innovation to boost training and inference capabilities.

Currently, reasoning models are seen by some participants as slower and more compute-intensive than regular LLMs. Their effectiveness also tends to decrease in some areas—for example, some fields of healthcare, where ambiguity and context make definitive answers less straightforward.

Quantum computing is also a transformative prospect. Once quantum computers successfully resolve error-correcting issues, they may be able to generate new data from the physical world, which can then be leveraged within LLMs, potentially providing a much richer array of information for training.

Finally, hardware innovations in areas such as photonics—which uses light to transfer data—were cited as a promising area of development. Photonic interconnects, per some speakers, aim to reduce energy consumption by up to 70%, improving the reliability and performance of AI clusters.

This material is provided in conjunction with the associated video/audio content for convenience. The content of this material may differ from the associated video/audio and Goldman Sachs is not responsible for any errors in the material. The views expressed in this material are not necessarily those of Goldman Sachs or its affiliates. This material should not be copied, distributed, published, or reproduced, in whole or in part, or disclosed by any recipient to any other person. The information contained in this material does not constitute a recommendation from any Goldman Sachs entity to the recipient, and Goldman Sachs is not providing any financial, economic, legal, investment, accounting, or tax advice through this program or to its recipient. Neither Goldman Sachs nor any of its affiliates makes any representation or warranty, express or implied, as to the accuracy or completeness of the statements or any information contained in this material and any liability therefore (including in respect of direct, indirect, or consequential loss or damage) is expressly disclaimed.

© 2025 Goldman Sachs. All rights reserved.
